AI’s Reckoning On Law and Order

Part Two of Revenant Research's Top Themes of AI in 2025

Revenant Research has identified three major themes for AI in 2025:

AI Sovereignty In The Second Cold War

AI’s Reckoning On Law and Order

AI’s Coming Cambrian Explosion For Enterprise Automation

This is Part Two.

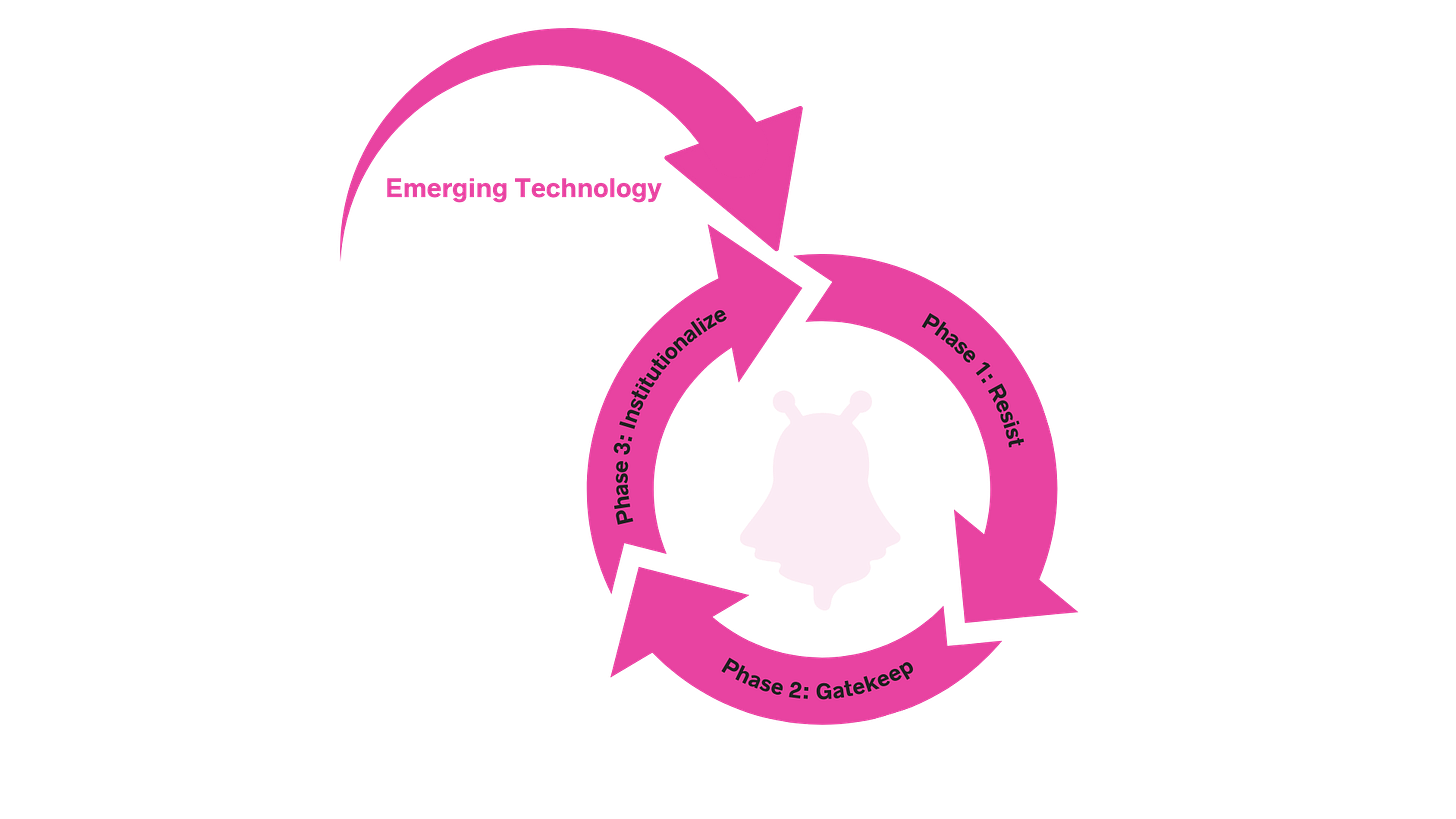

The legal field has historically followed a cyclical pattern in its adoption of disruptive technology, a pattern I call the Resist–Gatekeep–Institutionalize Cycle (RGI Cycle). This model played out in response to the printing press, forensic science, DNA evidence, and even computers, and it has allowed the legal industry to adapt over long periods of time while maintaining traditional controls and predictability. AI, however, challenges this cycle in ways that are far more existential than anything the profession has encountered before.

The Resist–Gatekeep–Institutionalize Cycle

Legal institutions are driven by a deep-seated imperative to uphold stability, predictability, and continuity. This conservatism stems from the foundational role of law as society’s anchor, ensuring that disruptions, however innovative, do not destabilize the legal order. Over centuries, this cautious ethos has given rise to a discernible pattern in how the profession interacts with technological change. The RGI Cycle allows us to understand the profession’s adaptive strategies. Each stage reflects a calculated response to innovation, calibrated to balance opportunity and risk:

Phase 1: Resist

Early reactions to disruptive technologies often stem from skepticism and fear, a protective instinct to shield the legal system and its existing investments from perceived chaos or unreliability. Legal authorities highlight the risks (unproven methodologies, ethical dilemmas, and potential misuse) to justify their hesitation.

Phase 2: Gatekeep

As evidence accumulates that a technology has merit, the legal profession's approach shifts to controlled gatekeeping. This phase relies heavily on formal training and certification programs designed to cultivate experts capable of managing and using the technology responsibly. Courts, legislatures, and professional bodies not only define the parameters of use but also ensure that practitioners are equipped with the necessary expertise. This stage reflects a deliberate effort to preserve hierarchical control while minimizing systemic risks.

Phase 3: Institutionalize

Once thoroughly vetted and regulated, the technology becomes an integral part of legal practice. Over time, courts establish consistent rules, attorneys incorporate the technology into workflows, and lawmakers formalize its role through statutory frameworks. Commercial technology providers devise complex systems and certifications to embed themselves in legal processes, effectively creating a form of regulatory capture through legal precedent. This spawns specialized practice areas, generates new revenue streams, and subtly reconfigures the legal landscape.

This model has proven remarkably resilient, offering a structured approach to integrating innovations such as the printing press, forensic science, DNA evidence, and computers. Yet, as the following sections will show, AI's scale, speed, and capacity to challenge ontological assumptions about responsibility and truth suggest that this well-trodden cycle may be approaching a breaking point, pushing the profession toward uncharted territory.

A Historical Survey of the RGI Cycle

The Printing Press: An Uncertain Beginning

Resist: When Johannes Gutenberg’s printing press emerged in the mid-15th century, it revolutionized the dissemination of information, including legal texts. Authorities and guilds, like England’s Stationers’ Company, quickly recognized the disruptive power of mass printing. Governments feared the chaos that might ensue if the public read legal texts and interpreted them without the guiding hand of licensed professionals.

Gatekeep: Over time, states realized the printing press could serve their interests if properly regulated. England’s Licensing of the Press Act of 1662 epitomized this stage by requiring printers to obtain licenses under the control of official authorities. Legal gatekeeping sought to ensure that only sanctioned interpretations of the law would proliferate.

Institutionalize: Eventually, widespread printing became indispensable. By standardizing legal texts and case reports, the press enabled more consistent jurisprudence, produced more educated attorneys, and promoted the rule of law on a societal scale. The printing press became woven into the fabric of legal infrastructure.

Forensic Science: Fingerprints, Photography, and the Courts

Resist: When fingerprint analysis was first introduced in the late 19th century, it faced significant skepticism. For example, the 1905 British murder trial of the Stratton brothers marked one of the first uses of fingerprint evidence in such a case. The defense heavily contested its reliability, arguing that fingerprints were unproven and subjective. Judges and lawyers debated whether the new method could withstand legal scrutiny, with critics emphasizing the lack of scientific consensus and standardized procedures at the time. Similarly, photographic evidence faced resistance because courts worried about potential manipulation, concerns that were later addressed through strict admissibility standards such as the authentication requirements of the Federal Rules of Evidence.

Gatekeep: As forensic techniques proved effective in identifying suspects, legal systems began setting standards for admissibility. In the United States, the Frye v. United States (1923) decision introduced the "general acceptance" standard, effectively gatekeeping which scientific methods could be used as evidence. Later, Federal Rule of Evidence (FRE) 702 and the Daubert v. Merrell Dow Pharmaceuticals (1993) decision refined the criteria, requiring expert testimony to rest on reliable principles and methods.

Institutionalize: With these guidelines in place, forensic science moved into the mainstream. Labs across jurisdictions standardized procedures, and forensic evidence gained tremendous weight in legal proceedings. Debates persisted about contamination and error rates, but the practice was no longer novel or optional.

DNA Evidence: Transforming Justice and Exposing Mistakes

Resist: DNA profiling arrived on the legal scene in the mid-to-late 1980s. Early cases, such as People v. Castro (1989), highlighted legal skepticism regarding the reliability of lab processes, statistical interpretation, and potential privacy concerns. Critics pointed to how DNA could be mishandled or contaminated, and some courts were slow to accept it as conclusive proof.

Gatekeep: Once the scientific basis for DNA testing was deemed sound, courts and legislatures outlined strict protocols for collection, analysis, and chain of custody. The Innocence Protection Act (2004) in the United States required preservation of biological evidence in federal criminal cases, acknowledging both the immense power of DNA to confirm guilt or prove innocence and the need for strict evidentiary controls. Many states enacted specific statutes on how DNA evidence should be handled, creating a robust gatekeeping apparatus that controlled how, when, and by whom DNA could be tested.

Institutionalize: DNA evidence is now a cornerstone of modern criminal justice, relied upon to exonerate the innocent and secure convictions in a wide array of cases. Organizations like the Innocence Project have used DNA to overturn wrongful convictions, reshaping societal trust in the justice system. DNA analysis has become institutionalized to the point that failing to use it when available may be considered malpractice in criminal defense.

The Computer Revolution: From Data Handling to Automation

Resist: Early computerization in the mid-20th century encountered hostility from attorneys who dismissed digital files as less authoritative than paper records. Courts were cautious about e-discovery and the authenticity of electronic documents, reflecting a resistance to abandoning centuries of paper-based legal practice.

Gatekeep: As computers proved indispensable, gatekeeping took the form of new rules and guidelines. The Federal Rules of Civil Procedure (FRCP), notably through their 2006 amendments, introduced e-discovery provisions to control how electronically stored information is requested, preserved, and shared. Many jurisdictions adopted electronic filing systems but required attorneys to register, undergo training, and follow strict protocols. This effectively placed the technology behind administrative and regulatory barriers.

Institutionalize: Today, court dockets and filings are almost exclusively digital in many jurisdictions. E-discovery is a well-established practice area, governed by institutionalized norms and specialized software. Despite early fears, the computerization of legal tasks became thoroughly woven into everyday practice.

The Three Waves of AI Disruption

To understand the scale of disruption AI introduces to the RGI cycle, we must examine its impact through three coming waves. Each represents a fundamental shift in the nature of legal systems and their ability to adapt. Resistance mechanisms collapse under the pace of AI evolution, gatekeeping falters as existing frameworks fail to address AI's complexity, and institutionalization proves inadequate for a technology that continuously redefines itself and generates new synthetic realities.

1. Technical Architecture and Legal Disruption: The first wave will place enormous strain on legacy services and legal tech infrastructure within firms. AI's probabilistic and continually evolving nature stands in stark contrast to deterministic software, which follows explicitly coded rules. Large-scale transformer models (e.g., GPT architectures) rely on neural networks with billions to trillions of learned parameters, so their outputs cannot be traced to any single line of code. This opacity creates verification challenges for legal applications, which have historically relied on transparent, reproducible processes. Consequently, law firms and investigative units will find that their legacy systems, rooted in complex business rules designed for regulatory capture, are ill-suited to interface with AI tools. A shortage of AI-competent expert witnesses further intensifies this operational stress. Because the legal industry did not help shape these technologies early, attempts to resist advanced AI through conventional cautionary measures will prove insufficient.
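The contrast between deterministic and probabilistic software can be made concrete. The following is a minimal Python sketch, assuming a toy three-outcome distribution; the tokens, probabilities, and function names are hypothetical illustrations, not any vendor's API or real model. A rule-based check returns the same answer every time, while temperature-scaled sampling, which is how generative models typically pick their next output, can return different answers to the same query unless every source of randomness is pinned down.

```python
import random

# Hypothetical next-output distribution for a single query (illustrative only,
# not taken from any real model).
TOKENS = ["liable", "not liable", "insufficient evidence"]
PROBS = [0.5, 0.3, 0.2]


def rule_based_answer(speed_mph: int, limit_mph: int) -> str:
    """Deterministic software: one explicit rule, same input -> same output."""
    return "liable" if speed_mph > limit_mph else "not liable"


def sampled_answer(seed: int, temperature: float = 1.0) -> str:
    """Probabilistic output: sample from a temperature-scaled distribution."""
    # Raising probabilities to 1/T and renormalizing is equivalent to
    # dividing the logits by T before applying softmax.
    scaled = [p ** (1.0 / temperature) for p in PROBS]
    total = sum(scaled)
    weights = [s / total for s in scaled]
    rng = random.Random(seed)
    return rng.choices(TOKENS, weights=weights, k=1)[0]


# The rule yields exactly one answer no matter how often it runs.
print({rule_based_answer(72, 65) for _ in range(100)})  # {'liable'}

# The "same" query sampled under different seeds can yield different answers.
print({sampled_answer(seed) for seed in range(100)})

# Only by fixing the seed (and every other input) is the result reproducible.
print(sampled_answer(7) == sampled_answer(7))  # True
```

The design choice worth noting is the seed: reproducibility in the sampled case is something the operator must deliberately preserve, whereas in the rule-based case it comes for free. That difference is the verification gap the paragraph above describes.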

2. Autonomy in Commercial Systems and Liability: The second wave will reach the court system from the commercial sector. As AI becomes embedded in autonomous systems, from self-driving vehicles to generative design platforms, it disrupts traditional doctrines of fault and negligence. The interaction of machine-learning algorithms with real-time data and layered feedback loops obscures clear lines of causation, complicating the legal assignment of liability. For instance, product liability frameworks falter when a defective outcome arises from a neural network's emergent behavior rather than an identifiable design flaw. The inability to pinpoint accountability conflicts with long-standing legal principles that require a definitive responsible party. This second wave subjects courts to unprecedented doctrinal stress, demanding novel legal constructs or entirely new liability frameworks.

3. Synthetic Realities and Legal Precedents: The third wave will present an existential threat to institutionalization. Advances in generative adversarial networks and diffusion models make it easy to produce ultra-realistic deepfakes, forging synthetic realities that erode traditional evidentiary standards. The courts' reliance on visual and auditory authenticity is undercut by the ease with which AI can fabricate convincingly “real” media, so every piece of digital evidence will need to be authenticated against AI manipulation. Agent systems will further enable digital twins, autonomous decision-making, and synthetic realities. With earlier technologies, standardization and expert oversight eventually resolved doubts about reliability; AI-generated realities evolve too rapidly for definitive institutional safeguards. This third wave poses a critical challenge by undermining the very nature of factual proof. The legal system, accustomed to “institutionalizing” technology behind stable guardrails, is ill-equipped for a technology that never holds still long enough for those guardrails to be built.

Projected Phases of Disruption:

1. Internal Stressors: Initially, law firms and investigative entities will experience acute internal pressures as AI disrupts existing workflows. Investments in legacy legal tech become liabilities, and the lack of in-house AI expertise compounds the problem. Legal technology risks falling behind the latest advances in software and computing as commercial software development shifts to AI-native processes. Resistance is overwhelmed as the rapid pace of technological advancement renders traditional systems obsolete. This phase marks the beginning of an existential threat for firms unwilling or unable to adapt. Winning firms will fundamentally rethink their legal tech infrastructure, shifting from high-cost, high-complexity legacy SaaS to custom applications whose cost is driven by low compute prices.

2. Courtroom Challenges: Following the internal collapse, a second wave of disruption emerges as disputes involving autonomous AI systems come to dominate litigation. Cases involving self-driving cars, AI-generated designs, and automated decision-making systems bring unprecedented challenges to courtrooms, exposing gaps in liability frameworks and necessitating rapid legal reforms.

3. Synthetic Reality Crisis: The third and most destabilizing phase arises as synthetic realities infiltrate evidence. Courts are forced to contend with hyper-realistic fabrications that undermine the authenticity of digital media. Every piece of digital evidence entered into court, from undercover recordings to CCTV footage to surveillance photos, will have to be authenticated against possible generation or manipulation by an AI system, requiring a completely new set of tools and expertise not present in the current legal system. This phase breaks the institutionalization process because synthetic realities fundamentally alter the evidentiary standards and assumptions upon which the legal system operates. Unlike prior technologies that could be standardized and integrated into existing frameworks, the dynamic and evolving nature of AI-generated synthetic realities resists stabilization. Courts must not only verify the authenticity of digital evidence in real time but also contend with the erasure of objective benchmarks for truth. The resulting instability renders institutional protocols obsolete and forces courts into a perpetual state of adaptation that outpaces the Resist–Gatekeep–Institutionalize cycle itself.
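It helps to see why existing tools fall short here. Courts already accept cryptographic hash values as a way to show that a forensic copy matches the original. The following is a minimal Python sketch of that familiar integrity check; the `CustodyRecord` type, the helper functions, and the sample bytes are hypothetical illustrations. The sketch makes the gap visible: a matching hash proves only that a file has not changed since it was hashed, and says nothing about whether the footage was AI-generated before it was ever collected.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class CustodyRecord:
    """Hypothetical chain-of-custody entry captured at collection time."""
    item_id: str
    sha256_at_collection: str


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def record_collection(item_id: str, data: bytes) -> CustodyRecord:
    # Fingerprint the file at the moment it is seized or acquired.
    return CustodyRecord(item_id, sha256_hex(data))


def unchanged_since_collection(record: CustodyRecord, data: bytes) -> bool:
    # compare_digest does a constant-time comparison; any edit to the file,
    # even a single bit, produces a different digest.
    return hmac.compare_digest(record.sha256_at_collection, sha256_hex(data))


# Illustrative usage with placeholder bytes standing in for a video file.
footage = b"frame-0001 frame-0002 frame-0003"
record = record_collection("CCTV-017", footage)

print(unchanged_since_collection(record, footage))  # True

tampered = footage.replace(b"0002", b"9999")
print(unchanged_since_collection(record, tampered))  # False

# Limitation: a deepfake hashed at collection would also verify as "unchanged".
```

The final comment marks the limitation this phase describes. Hash-based integrity checks extend the chain of custody forward from the moment of collection, but authenticating against AI generation requires evidence about how the media was created, which this technique cannot supply.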

Analyzing the three waves reveals the specific legal precedents and frameworks most vulnerable to AI disruption. This calls for a systematic review to anticipate those vulnerabilities and implement adaptive solutions. Below is an outline of the precedents and statutes likely to be challenged by the three waves.

Chronological List of Laws and Statutes To Watch

MacPherson v. Buick Motor Co. (1916): Eliminated the privity requirement in negligence cases, making manufacturers liable to end users for negligently made products and requiring them to ensure product safety.

Federal Rules of Civil Procedure (FRCP, 1938): Provisions like Rule 26 govern relevance and proportionality in discovery, including e-discovery.

U.S. Patent Act (1952, 35 U.S.C.): Requires a human "inventor"; in Thaler v. Vidal (2022), the Federal Circuit held that an AI system cannot be named as one.

Federal Rule of Evidence 702 (1975): Regulates expert testimony, demanding a reliable basis for scientific or technical knowledge.

Federal Rule of Evidence 901 (1975): Governs authentication of evidence in court.

Copyright Act (1976, 17 U.S.C. § 102): Protects "original works of authorship," which has been interpreted to require a human author, leaving purely AI-generated content without clear ownership protections.

Electronic Communications Privacy Act (ECPA, 1986, amended 2001): Protects electronic communications from unauthorized access.

Stored Communications Act (SCA, 1986): Enacted as Title II of the ECPA; protects the privacy and integrity of stored communications.

Daubert v. Merrell Dow Pharmaceuticals (1993): Set the federal standard for admissibility of expert testimony in court.

Children’s Online Privacy Protection Act (COPPA, 1998, rule amended 2013): Imposes restrictions on the collection of data from children under 13.

General Data Protection Regulation (GDPR, 2018): Article 22 limits solely automated decision-making that produces legal or similarly significant effects.

California Consumer Privacy Act (CCPA, 2018): Empowers consumers to control their personal data.

California Deepfake Laws (AB 730 and AB 602, 2019): Address malicious use of deepfakes in political and non-consensual sexually explicit contexts. These laws are too narrow, leaving gaps in evidentiary standards for deepfake manipulation in broader civil and criminal cases.

Transformation Playbook For Law and Legal Services Firms

Discovery and Due Diligence:

Technology Assessment & Planning

- Audit current legal tech stack for AI compatibility

- Identify critical systems that need modernization

- Create a budget allocation for AI infrastructure

- Review all vendor contracts with AI implications

Risk Management

- Update malpractice insurance to cover AI-related incidents

- Develop internal guidelines for AI tool usage

- Create documentation requirements for AI-assisted work

- Review client engagement letters to address AI usage

Training & Expertise

- Designate AI competency leads in each practice area

- Implement mandatory AI literacy training for all attorneys

- Create technical teams for AI evidence handling

- Establish partnerships with AI forensics experts

Medium-Term Implementation:

Infrastructure Development

- Begin phased replacement of legacy systems

- Implement new evidence authentication protocols

- Develop AI-ready document management systems

- Create secure environments for handling synthetic media

Practice Area Evolution

- Create specialized teams for AI-heavy cases

- Develop new billing models for AI-assisted work

- Establish protocols for autonomous system cases

- Build expertise in AI liability assessment

Long-Term Investment:

Organizational Transformation

- Restructure practice groups around AI competencies

- Build in-house AI development capabilities

- Create a dedicated AI forensics unit

- Develop proprietary AI tools for legal analysis

Client Strategy

- Create AI advisory services for clients

- Develop AI risk assessment frameworks

- Build predictive analytics capabilities

- Establish thought leadership in AI law

Key Performance Indicators:

Measure Success Through

- Revenue from AI-related matters

- Efficiency gains from AI implementation

- Successful handling of AI evidence cases

- Staff AI competency levels

- Client satisfaction with AI capabilities

Budget Considerations:

Resource Allocation (Template)

- 30% to infrastructure modernization

- 25% to training and expertise development

- 20% to AI tools and capabilities

- 15% to risk management systems

- 10% to research and development
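The Resource Allocation template is simple arithmetic, but applying it to a real budget raises a rounding question once amounts are expressed in cents. The following is a minimal Python sketch, assuming a hypothetical total budget of $1,000,000 and a hypothetical `allocate` helper. It converts the template percentages into dollar amounts and checks that the line items reconcile exactly to the total.

```python
from decimal import Decimal, ROUND_HALF_UP

# Template shares from the Resource Allocation list above, in percent.
ALLOCATION_TEMPLATE = {
    "infrastructure modernization": 30,
    "training and expertise development": 25,
    "AI tools and capabilities": 20,
    "risk management systems": 15,
    "research and development": 10,
}


def allocate(total_budget: Decimal, template: dict[str, int]) -> dict[str, Decimal]:
    """Split a budget by percentage, rounded to cents, reconciling to the total."""
    if sum(template.values()) != 100:
        raise ValueError("template shares must sum to 100%")
    cents = Decimal("0.01")
    shares = {
        area: (total_budget * pct / 100).quantize(cents, rounding=ROUND_HALF_UP)
        for area, pct in template.items()
    }
    # Assign any rounding remainder to the largest line so the sum is exact.
    largest = max(template, key=template.get)
    shares[largest] += total_budget - sum(shares.values())
    return shares


for area, amount in allocate(Decimal("1000000"), ALLOCATION_TEMPLATE).items():
    print(f"{area}: ${amount:,.2f}")
# infrastructure modernization: $300,000.00
# training and expertise development: $250,000.00
# AI tools and capabilities: $200,000.00
# risk management systems: $150,000.00
# research and development: $100,000.00
```

Using `Decimal` rather than floating point keeps currency amounts exact. Pushing any leftover cent onto the largest line is only one reasonable convention; a firm could equally book it to a contingency line.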

Change Management

- Regular communication about AI initiatives

- Clear metrics for success

- Continuous feedback loops

- Regular strategy adjustments